Organisations currently find themselves at a crossroads. There has been a burst of energy as businesses rush to take advantage of the opportunities AI solutions offer. Alongside this, macro-economic pressures are pushing more organisations to consider whether AI can solve their People Function and workforce challenges. This poses a unique challenge – how can you sustainably test and roll out your AI strategy while balancing efficiency and supporting your employees through AI-driven change?

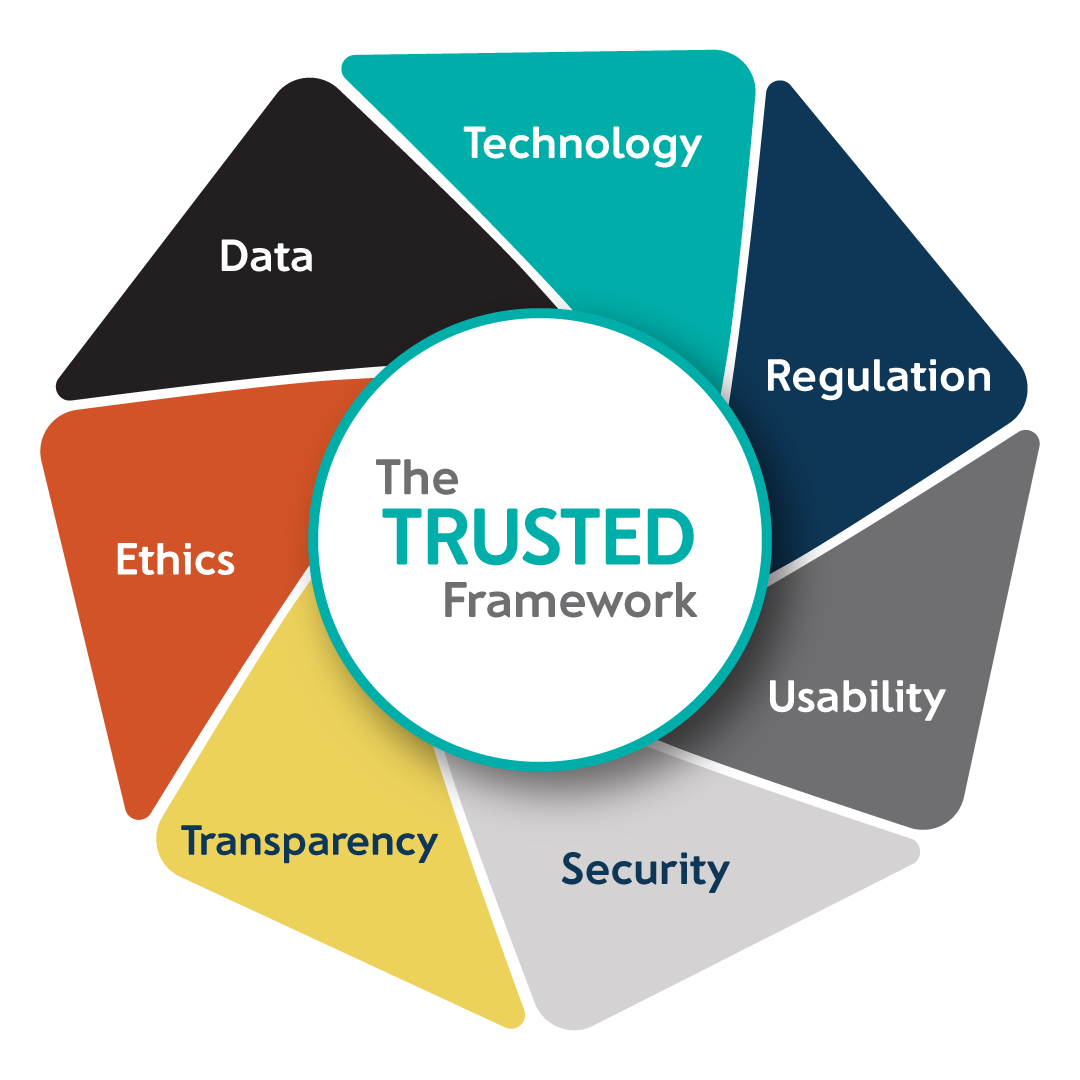

To help People Function leaders achieve this balance, we have developed the TRUSTED Framework as part of our AI services. It sets out our seven key considerations for AI adoption: Technology, Regulation, Usability, Security, Transparency, Ethics, and Data.

In this blog, we unpack each element of our TRUSTED Framework in detail to guide responsible, employee-centric AI implementation.

Technology

What technology foundations are needed for AI in HR?

Laying the groundwork for AI readiness

Before embarking on any AI implementation, organisations must assess whether their existing technology landscape can support it, both today and in the years ahead as AI capabilities evolve. This means evaluating infrastructure, integration points, and vendor capabilities across human capital management (HCM), applicant tracking system (ATS), and talent platforms.

While AI functionality can often be added to these platforms relatively easily, such quick wins should be balanced with a long-term view of how AI fits into the broader employee experience. A fragmented approach, such as deploying isolated chatbots across disconnected systems, can undermine adoption efforts.

Building scalable and responsive architecture

Organisations must ensure their tech roadmap is flexible enough to accommodate emerging AI use cases, from generative agents to predictive analytics. This includes understanding how AI will interact with existing data flows, APIs, and governance layers.

A scalable, modular architecture, in which AI applications are broken down into simple functions that are easy to build upon, allows businesses to experiment, learn, and iterate without locking themselves into rigid structures. By treating technology as a foundation rather than a finish line, organisations can future-proof their AI strategy and support meaningful transformation.

Where to get started:

- Assess your current technology landscape for AI readiness; review infrastructure, integration points, and vendor capabilities across HR platforms.

- Avoid fragmented AI deployments and focus on building a cohesive, long-term strategy that enhances the overall employee experience.

- Design a scalable and flexible architecture to ensure your tech roadmap can accommodate emerging AI use cases and future growth.

Regulation

What regulations should HR leaders consider when adopting AI?

Navigating the evolving regulatory landscape around AI

As AI becomes more embedded in HR processes, the regulatory landscape is rapidly shifting to keep pace. Organisations must stay ahead of emerging legislation such as the EU AI Act to ensure compliance and avoid costly missteps. These regulations are not just legal formalities; they are designed to safeguard employee rights and promote transparency and ethical use of AI across recruitment, performance management, and other sensitive HR functions.

Research from EY suggests the most common AI risks are non-compliance with new laws (57%), negative impacts on sustainability goals (55%), and biased outputs (53%). As such, regulatory awareness is no longer optional; it is essential.

Governance and accountability in AI deployment

Effective regulatory compliance starts with strong internal governance. A McKinsey survey found that only 18% of AI-focused organisations had councils or boards with the authority to make decisions on responsible AI governance. Organisations must establish cross-functional oversight, bringing together HR, legal, IT, and compliance teams to assess risk, define responsible use, and ensure AI systems operate fairly.

Employees must be informed about how AI is used and how their data is handled, with clear channels for feedback and redress. By embedding regulatory thinking into AI strategy, organisations can build trust, mitigate risk, and foster a culture of respect and integrity.

Where to get started:

- Stay informed about evolving legislation and ensure compliance to safeguard employee rights and avoid costly missteps.

- Create cross-functional oversight involving HR, legal, IT, and compliance to assess risk and define responsible AI use.

- Communicate clearly with employees about how AI is used and how their data is handled while providing channels for feedback.

Usability

How can HR ensure AI tools are user-friendly and improve employee experience?

Putting employee experience at the heart of AI adoption

Usability in AI is about more than just a slick user interface – it is about how employees interact with new tools and how those tools fit into their daily job responsibilities. Organisations must consider the practical impacts of AI enablement on the end user, ensuring that solutions are intuitive and genuinely improve workflows.

Research from Gartner indicated that only 42% of employees know how to identify where AI can improve their work. If employees find AI tools confusing or disconnected from their actual needs, the anticipated benefits will not materialise. It is therefore essential to design AI experiences that are intuitive, supportive, and tailored to the unique roles within a business.

Driving AI adoption through meaningful interaction

Successful AI implementation hinges on employee engagement and interaction. It is not enough to simply deploy new technology; organisations must understand how different teams and roles will use AI and actively seek feedback to refine the experience.

First impressions are also crucial when introducing new tools: an overcomplicated interface at launch can hold back adoption long afterwards. Adoption metrics, such as the number of teams using new tools and the number of repeat users, should be tracked as measures of success, with continuous improvements made based on user input. By focusing on usability, organisations can make AI a valued part of the employee experience, driving both efficiency and satisfaction.
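To make the adoption metrics above more concrete, here is a minimal Python sketch that counts active teams and repeat users from a usage log. The log format (user ID, team, date of use) and the definition of a repeat user as someone active on two or more distinct days are illustrative assumptions rather than a standard; adapt both to whatever your HR platform actually records.

```python
from collections import defaultdict
from datetime import date

# Hypothetical usage log entry: (user_id, team, day the AI tool was used).
# The structure and field meanings are illustrative assumptions.
UsageEvent = tuple[str, str, date]


def adoption_metrics(events: list[UsageEvent]) -> dict[str, float]:
    """Summarise adoption: active teams, active users and repeat-user rate."""
    days_per_user: dict[str, set[date]] = defaultdict(set)
    teams: set[str] = set()
    for user_id, team, day in events:
        days_per_user[user_id].add(day)
        teams.add(team)

    total_users = len(days_per_user)
    # Assumption: a "repeat user" used the tool on two or more distinct days.
    repeat_users = sum(1 for days in days_per_user.values() if len(days) >= 2)
    repeat_rate = repeat_users / total_users if total_users else 0.0

    return {
        "active_teams": len(teams),
        "active_users": total_users,
        "repeat_users": repeat_users,
        "repeat_user_rate": round(repeat_rate, 2),
    }


if __name__ == "__main__":
    log = [
        ("u1", "Recruitment", date(2025, 3, 3)),
        ("u1", "Recruitment", date(2025, 3, 10)),
        ("u2", "Payroll", date(2025, 3, 4)),
        ("u3", "Recruitment", date(2025, 3, 4)),
    ]
    print(adoption_metrics(log))
```

Tracking these figures over time, rather than as a one-off snapshot, is what shows whether usability improvements are actually turning first-time users into repeat users.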

Where to get started:

- Prioritise employee experience by ensuring AI tools are intuitive, supportive, and tailored to specific roles and workflows.

- Involve employees in the design and rollout of AI solutions and gather feedback to improve usability and encourage adoption.

- Track adoption metrics and continuously improve based on user input.

Security

How can organisations keep employee data secure when using AI?

Safeguarding data in an AI-driven world

Security remains a foundational concern as organisations integrate AI into HR processes. With sensitive employee data at stake, robust measures are essential to protect privacy and comply with regulations such as GDPR and the EU AI Act, as the consequences of failing to do so can be very costly. Organisations must prioritise the security of their internal systems while also scrutinising how data is managed when it is combined with external sources or shared with third-party AI applications.

The risks of data breaches or unauthorised access are heightened in an AI context – research from McKinsey found that around 80% of organisations have encountered risky behaviour from AI agents, such as improper data exposure and access to systems without authorisation. This calls for proactive – rather than reactive – security strategies.
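One proactive control is data minimisation with a deny-by-default rule: an AI agent or third-party tool receives only the employee fields it has been explicitly approved to see. The minimal Python sketch below assumes a hypothetical allowlist keyed by tool name; the tool names, field names, and `UnauthorisedToolError` are illustrative and not part of any particular product.

```python
# Hypothetical allowlist: which employee fields each external AI tool may receive.
# Tool names and field names are illustrative assumptions only.
FIELD_ALLOWLIST: dict[str, frozenset[str]] = {
    "recruitment_assistant": frozenset({"job_title", "skills", "location"}),
    "learning_recommender": frozenset({"job_title", "skills", "completed_courses"}),
}


class UnauthorisedToolError(Exception):
    """Raised when a tool has no approved data-sharing profile."""


def minimise_for_tool(tool: str, record: dict[str, object]) -> dict[str, object]:
    """Return only the fields a given tool is approved to see."""
    allowed = FIELD_ALLOWLIST.get(tool)
    if allowed is None:
        # Deny by default: an unknown tool receives nothing rather than everything.
        raise UnauthorisedToolError(f"No data-sharing approval for tool {tool!r}")
    return {field: value for field, value in record.items() if field in allowed}


if __name__ == "__main__":
    employee = {
        "employee_id": "E1",
        "name": "A. Smith",
        "salary": 52000,
        "job_title": "Analyst",
        "skills": ["SQL"],
        "location": "Leeds",
    }
    # Salary, name and ID are stripped before anything leaves the HR system.
    print(minimise_for_tool("recruitment_assistant", employee))
```

Keeping the allowlist in one reviewed place also gives HR, IT, and legal a shared artefact to approve, which supports the cross-functional governance described in this section.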

Collaboration and governance for secure AI

Effective security requires collaboration between HR, IT, and other key stakeholders, as siloed thinking can leave gaps in protection. Although it is easier said than done, it is vital to establish clear governance structures that span departments and are led by senior leadership.

Regular reviews of security protocols, ongoing training, and transparent communication about data usage can help build trust and ensure compliance. By championing cross-functional collaboration to enable security, organisations can confidently leverage AI while safeguarding their workforce’s most valuable information.

Where to get started:

- Implement robust security measures to protect sensitive employee data, complying with regulations like GDPR and the EU AI Act.

- Evaluate how data is managed, especially when integrating external sources or third-party AI applications.

- Provide ongoing training and transparent communication about data usage to build trust.

Transparency

Why is transparency important in AI-driven HR processes?

Building trust through openness and clarity

Transparency is critical for building trust in AI systems. A University of Portsmouth study found that around 60% of employees were in favour of AI making managerial decisions involving pay and promotions, particularly because of its potential to make unbiased decisions. However, this enthusiasm did not translate into wider acceptance, because the decision criteria those AI systems used were not clearly explained (CIPD).

Employees want to understand how AI tools work, how decisions are made, and how their data is used. Organisations should be open about the testing and development of AI solutions, providing clear explanations of how outcomes are generated and why they matter. This openness can help demystify AI, reducing resistance and encouraging wider adoption.

Ensuring reliability and accountability

Beyond just being easier to trust, transparent AI systems also promote accountability. Organisations should regularly review AI outputs for accuracy and fairness, sharing these findings with employees to demonstrate reliability. When errors or biases occur, a transparent approach can enable swift correction and continuous improvement. Framing testing as a necessary part of the deployment journey can create a culture where failure becomes an opportunity for collective learning.

By prioritising transparency, organisations can help AI move beyond being a mysterious entity to become a genuinely supportive tool that employees understand.

Where to get started:

- Be open about how AI tools work, how decisions are made, and how employee data is used.

- Share clear explanations of AI outcomes and decision criteria to demystify AI.

- Regularly review AI outputs for accuracy and fairness, sharing findings with employees to demonstrate reliability.

Ethics

What ethical principles should guide AI adoption in HR?

Embedding ethical principles in AI deployment

Ethical considerations must function as the primary guiding principle behind AI use, particularly in an HR context. An IBM survey found that only around 40% of employees trust organisations to be responsible and ethical in their use of AI. To reduce this apprehension, organisations must assess how AI impacts employees – from job role changes and competency training to career progression and workplace fairness.

AI tools should be designed and used only in ways that align with company values. In practice, this can involve avoiding bias in decision-making, ensuring outputs are explainable, and being transparent about how AI systems are trained and validated. Done right, ethical AI can foster a culture of integrity and help build trust between employees and organisations.

What is HR's role in shaping responsible AI use?

HR is uniquely positioned to lead the ethical oversight of AI. Rather than leaving this responsibility solely to technical teams, HR can help define what ethical AI looks like in practice – validating outputs, ensuring fairness, and supporting employees through AI-driven change.

The AI revolution also provides a broader opportunity to rethink roles and skills – as AI automates manual tasks, HR professionals can pivot toward specialising in governance and oversight. This can help organisations use technology to enhance the human experience at work without undermining it.

Where to get started:

- Align AI tools and processes with company values; actively avoid bias, ensure explainability, and be transparent about training and validation.

- Assess the impact of AI on employees, including job roles, training needs, and career progression.

- HR should take the lead in overseeing AI ethics, reviewing outcomes, and supporting employees as they adapt to AI-driven change.

Data

Why is strong data governance critical for AI implementation?

Maximising value while protecting privacy

Data is the foundation of AI: any AI system is only as good as the data it is trained on. AI relies on data to deliver insights and drive automation, so the quality and governance of that data are critical. HR manages sensitive employee and company information, and combining it with external datasets can introduce additional privacy, quality, and compliance risk into an already delicate balance.

According to Gartner, nearly 90% of HR leaders agree that poor integration has led to minimal value gains from AI tools. Organisations must therefore ensure that data used in AI systems is accurate, consistent, relevant, and securely managed, with clear boundaries around its use. Poor data quality or unclear sourcing can lead to unreliable outputs, eroding trust and potentially introducing bias.
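As an illustration of what "accurate, consistent, and relevant" can mean in practice, the minimal Python sketch below runs three basic checks on HR records before they feed an AI tool: required fields are present, categorical values come from an agreed list, and employee IDs are unique. The field names and allowed values are hypothetical; real checks would be defined by your own data governance protocols.

```python
from collections import Counter

# Illustrative assumptions: these field names and allowed values are hypothetical.
REQUIRED_FIELDS = ("employee_id", "department", "employment_type")
ALLOWED_EMPLOYMENT_TYPES = {"full_time", "part_time", "contractor"}


def data_quality_report(records: list[dict[str, str]]) -> dict[str, list[str]]:
    """Flag basic completeness, consistency and uniqueness problems in HR records."""
    issues: dict[str, list[str]] = {
        "missing_fields": [],
        "invalid_values": [],
        "duplicate_ids": [],
    }

    for index, record in enumerate(records):
        # Completeness: every required field must be present and non-empty.
        for field in REQUIRED_FIELDS:
            if not record.get(field, "").strip():
                issues["missing_fields"].append(f"row {index}: {field}")
        # Consistency: categorical values must come from an agreed list.
        employment_type = record.get("employment_type", "")
        if employment_type and employment_type not in ALLOWED_EMPLOYMENT_TYPES:
            issues["invalid_values"].append(
                f"row {index}: employment_type={employment_type!r}"
            )

    # Uniqueness: each employee ID should appear only once.
    id_counts = Counter(r["employee_id"] for r in records if r.get("employee_id"))
    issues["duplicate_ids"] = sorted(eid for eid, n in id_counts.items() if n > 1)
    return issues
```

For example, a record with an empty department and an employment type written as `"Full-Time"` would be flagged twice, and an ID shared by two records would appear under `duplicate_ids`. Checks like these are cheap to run on every data load and catch problems before they surface as unreliable AI outputs.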

Building a foundation for sustainable AI

Responsible data management is essential for sustainable, long-term AI use. Our data and analytics team strongly believes that organisations must invest in improving data quality, establish clear governance protocols, and keep pace with regulatory change to remain compliant.

Employees should be informed about how their data is used and given opportunities to provide feedback or raise concerns. By treating data as a strategic asset that must be protected and respected, organisations can unlock the full potential of AI while maintaining employee trust and regulatory compliance.

Where to get started:

- Invest in improving data quality, establishing clear governance protocols, and ensuring compliance with regulatory changes.

- Ensure data used in AI systems is accurate, consistent, relevant, and securely managed with clear boundaries.

- Treat data as a strategic asset – protect and respect it to unlock AI’s full potential while maintaining trust and compliance.

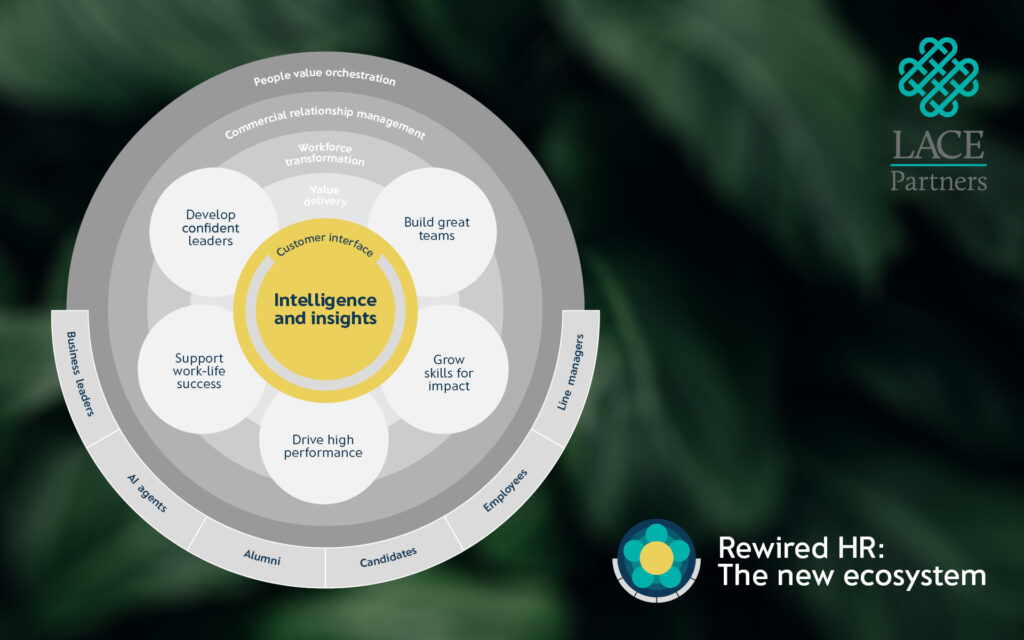

How is HR using AI so far?

As organisations continue to explore AI’s use cases in HR and beyond, the TRUSTED Framework offers a practical lens to assess readiness and guide responsible implementation. We’re working with our clients to implement their AI strategies and have found that AI has already begun to transform HR.

Gartner research shows that appetite for AI implementation is increasing: 61% of HR leaders were actively planning or deploying generative AI by 2025, up from just 19% in mid-2023. From streamlining recruitment and performance management to predicting workforce trends and automating routine tasks, AI’s potential impact is far-reaching. AI-powered tools can uncover hidden patterns in people data, recommend personalised learning programmes, and free up HR teams to focus on strategic initiatives. However, while AI can clearly enhance processes, the human touch remains vital for building meaningful relationships and fostering a positive workplace culture.

Need support with your AI strategy? We’re here to help, from identifying opportunities for AI across your business to building an AI roadmap with suggested use cases. Learn more about our AI services or reach out via the form below to have a chat with the team.